January 29, 2026

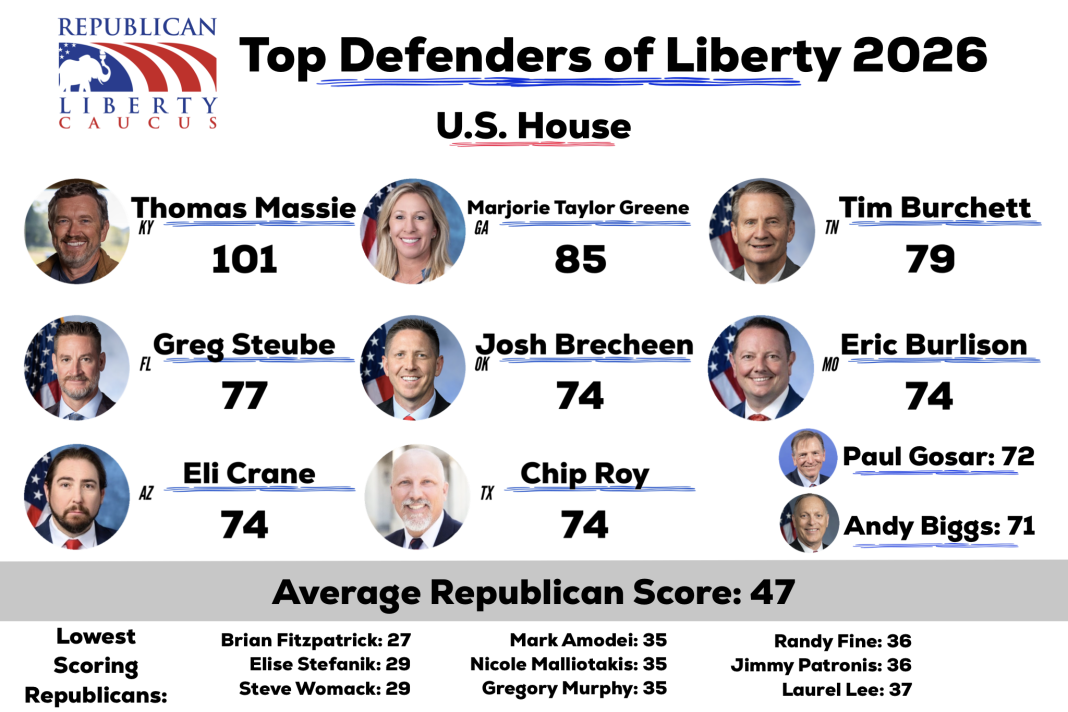

The Republican Liberty Caucus (RLC) has published its 2026 Liberty Index, its annual scorecard evaluating U.S. House Republicans on votes from 2025. Once again, Rep. Thomas Massie earned the highest score, emerging as a consistent champion of liberty amid widespread disappointment.

The Liberty Index scores Republican members of Congress on 20 key votes that either advanced or undermined core RLC principles: individual liberty, limited government, free markets, fiscal responsibility, and an America First, non-interventionist foreign policy. This year’s results were grim for most House Republicans. With full GOP control of the House, Senate, and White House in 2025, the party had a golden opportunity to deliver on long-standing promises of fiscal restraint. Instead, most members rubber-stamped spending bills that perpetuated Biden-era spending levels, further inflating the national debt, which now exceeds $38 trillion.

While Republicans notched a few wins—such as the passage of H.J. Res. 20, H.J. Res. 35, and H.J. Res. 87, which repealed unnecessary EPA and Department of Energy regulations—these modest free market victories pale against the backdrop of trillions in continued spending.

As for the scoring, the Liberty Score is the percentage of scored votes on which a Representative voted in line with the RLC. On votes alone, the highest possible Liberty Score is 100, which a Representative achieves by voting with the RLC 100% of the time. Bonus points are awarded for cosponsoring certain bills that never received a floor vote. Once bonus points are factored in, some Representatives can score above 100.
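The arithmetic described above can be sketched in a few lines of Python. This is only an illustration, not the RLC's actual method: the function name `liberty_score`, the idea that bonus points are simply added to the vote percentage, and the bonus values in the usage lines are all assumptions, since the scorecard text does not say how many points a cosponsorship is worth or how missed votes are counted.

```python
def liberty_score(aligned_votes: int, scored_votes: int = 20,
                  bonus_points: float = 0.0) -> float:
    """Return a hypothetical Liberty Score.

    aligned_votes: votes cast in line with the RLC position.
    scored_votes:  total votes scored (the 2026 index uses 20).
    bonus_points:  assumed to be added directly to the percentage;
                   the real per-bill bonus value is not stated in the source.
    """
    if scored_votes <= 0:
        raise ValueError("scored_votes must be positive")
    if not 0 <= aligned_votes <= scored_votes:
        raise ValueError("aligned_votes must be between 0 and scored_votes")

    # Base score: share of scored votes aligned with the RLC, as a percentage.
    base_score = 100.0 * aligned_votes / scored_votes

    # Assumption: bonus points stack on top, so totals may exceed 100.
    return base_score + bonus_points


# Illustrative inputs only; these are not real members' records.
perfect_votes = liberty_score(20)                    # all 20 votes aligned
partial_votes = liberty_score(15)                    # 15 of 20 votes aligned
with_bonus = liberty_score(20, bonus_points=5.0)     # made-up bonus value
```

Under these assumptions, a member who sided with the RLC on 15 of the 20 votes would land at 75, and any bonus added to a perfect voting record pushes the total past 100.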

The 2026 Liberty Index delivers a clear message: House Republicans largely abandoned fiscal conservatism when it mattered most. Scores plummeted for many who had campaigned as budget hawks.

Most Republicans in Congress won’t be happy with their score on the 2026 Liberty Index, but the RLC isn’t here to make friends in Washington. We’re here to keep our politicians accountable for their actions.

True liberty demands more than occasional victories; it requires consistent courage against the status quo, as Massie demonstrates. Congressman Thomas Massie stood out as one of the few willing to vote no on reckless, wasteful appropriations. His near-perfect record cements his standing as Congress’s most principled defender of liberty.

View the full 2026 Liberty Index scorecard below or on Scribd.